Man Versus Machine: The Truth About AI In Interviewing

There was a time when interviews were about two things: figuring out if someone could do the job, and whether you could stand to share a Slack channel with them. Now?

Now, our evaluation criteria have become a bit more esoteric: things like whether they blink too much, smile too little, or fall outside a statistical model trained on “proprietary data” that’s almost certainly pirated intellectual property.

Welcome to hiring in the age of the algorithm, where we’ve replaced gut instinct and basic decency with webcam recordings, acoustic patterning, and chatbots who “just want to get to know you better.”

Which is only kind of creepy.

Nobody asked for this. Especially not candidates. And definitely not hiring managers, who actually enjoy the one part of recruiting that doesn’t involve updating the ATS while pretending their HRBP is going to read the notes.

If Interviews Are Broken, Why Do They Still Work?

There’s this magical thinking in HR tech that if we can just build the perfect model, we’ll finally fix the interview. That the reason hiring keeps breaking down isn’t because job descriptions are made up, interviewers are untrained, or hiring goals change three times mid-search. It must be the format.

So we fix it, not by training people or investing in better hiring manager enablement, which would be, you know, a logical approach, but by feeding some white-labeled LLM the last 10,000 hiring decisions we made and hoping it doesn’t just scale up all our old mistakes faster, with slightly better branding.

The result: fewer conversations, more dashboards, and a shift in focus from personalization to standardization, from open-ended to highly structured, and from screening for soft skills to filling out scorecards.

But here’s the problem. Real people don’t like talking to robots. If you’ve ever yelled “speak to an agent” on an automated phone system, or used a D2C chatbot, you probably already knew that.

Thing is, candidates are not shy about sharing their disdain for AI interviews, which aren’t really interviews so much as prescreens conducted by non-sentient beings incapable of offering empathy or picking up on even basic social cues. If you’re OK with that, save yourself some time and money and delegate your interviewing responsibilities to any SHRM-certified HR Generalist.

If you’re part of an enterprise HR team, chances are you’ve already got more than enough robots working in your talent org to justify getting another one.

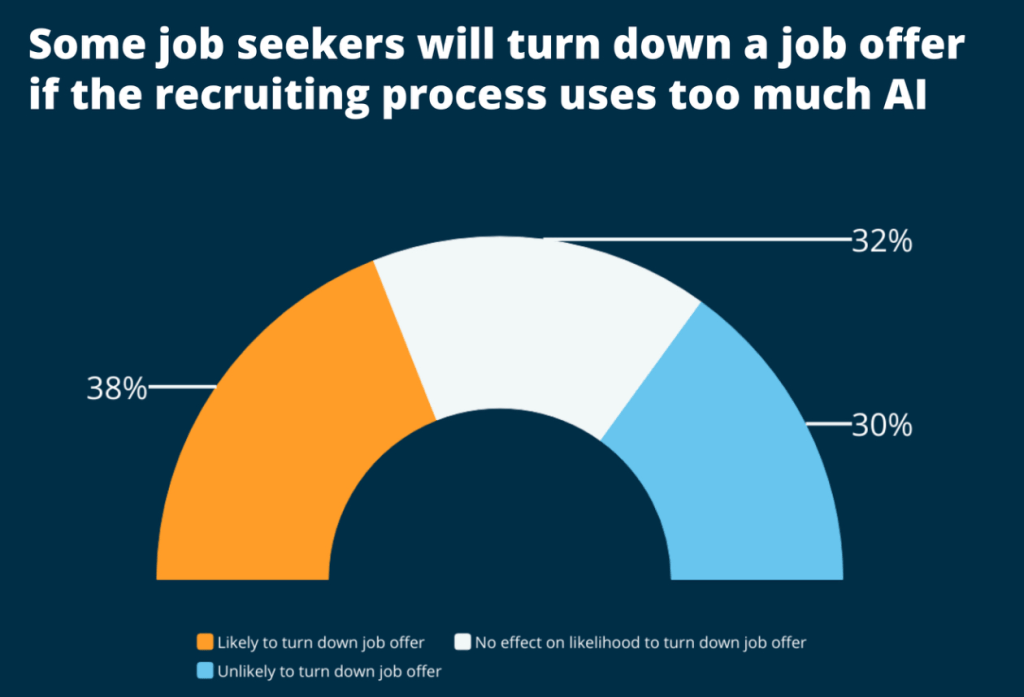

In fact, a peer-reviewed 2025 study found that candidates were significantly less likely to apply for jobs that required AI-based interviews. And who can blame them?

If candidate experience really matters, then we’re undoing any gains we might have made by adding unnecessary friction to an already onerous application process.

Because we all know there’s nothing candidates love more than going from wasting an hour on a job application in Taleo to talking with an equally anachronistic avatar ready to waste even more of their time.

But hey, you can give her a fun Australian accent and put your company branding in the corner, so there’s that. FML.

Here’s the thing: in that study, the moment AI entered the interview process, perceived fairness tanked, and so did applicant interest.

The researchers tied this to lowered expectations of procedural justice and diminished trust in employers’ decision-making processes.

Translation: people don’t trust bots to evaluate them fairly. And, frankly, why should they?

Nothing Says “Culture Fit” Like Talking to a Cartoon Bot

The interview is the last part of hiring that still allows for spontaneity. Someone might surprise you, or say something unscripted, or light up in a way that makes you think, “Maybe this person’s got something.”

That vibe? It doesn’t show up in scoring rubrics.

In a recent study of job seekers in India, participants said they felt heightened anxiety, pressure to perform perfectly, and fear that any nervous tic or deviation from a perceived norm would get them flagged. They weren’t answering questions so much as trying to survive them.

Another study found that AI interfaces encouraged candidates to alter how they presented themselves. More filtering. More faking. Less candor. And honestly? Totally defeating the entire point of conducting an interview in the first place.

So yes, the tech might speed things up. But what you gain in velocity, you lose in authenticity.

AI-led interviews promise scale, speed, and structure. But if all you’re doing is automating the most superficial parts of the process, what are you actually improving?

According to a 2025 study published in Humanities and Social Sciences, candidates rated AI interviews as less fair, less attractive, and less desirable than human ones. When AI was perceived as evaluating personality or behavior instead of just screening for skills, applicants disengaged.

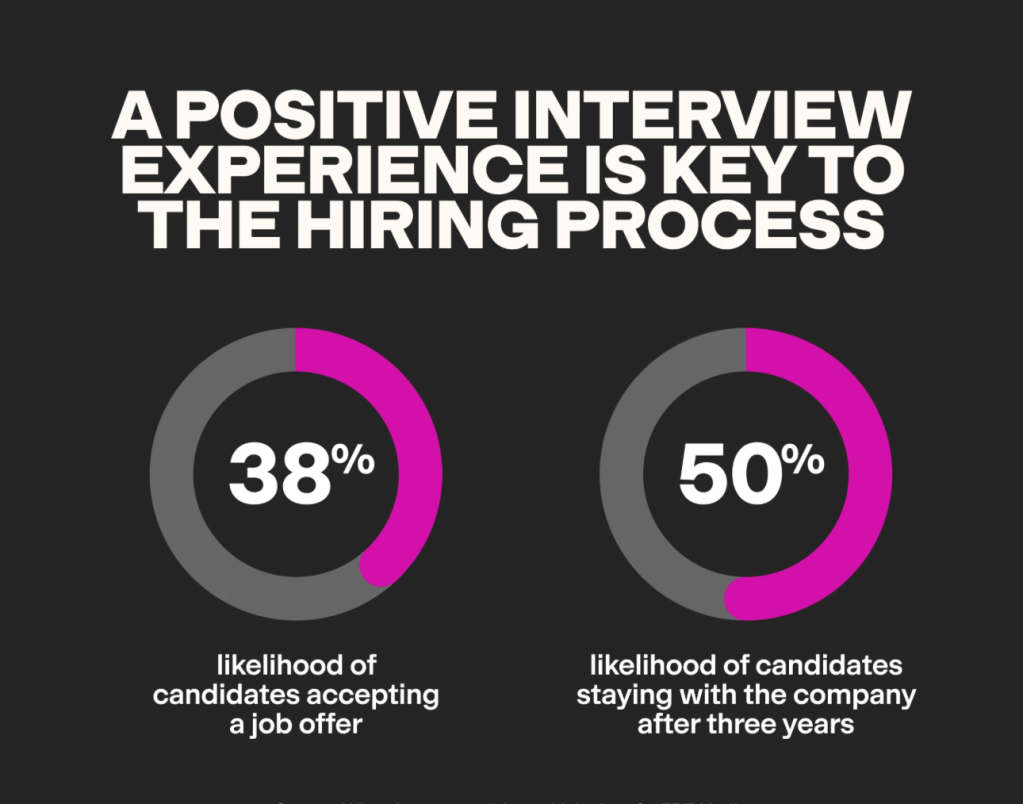

The effect also shows up at the offer stage. Another study, in the American Journal of Social Psychology, found that candidates who had human-led interviews were more likely to accept offers than those who went through AI assessments, even when compensation and job level were the same.

Why? Because candidates associate human interviews with trust, empathy, and warmth. AI interviews? Not so much.

Efficiency is great. But efficiency without insight is just rejection at scale.

AI interviews don’t reward originality. They reward conformity. They reward candidates who understand what the system is optimizing for. That’s not authenticity. That’s auditioning for a role in a workplace sitcom that probably gets canceled halfway through onboarding.

In a preprint study, researchers tested different avatars and styles for AI bots in mock interviews. Male, female, light-skinned, dark-skinned, upbeat, neutral, whatever. Turns out, it didn’t matter.

Candidates consistently reported lower trust, less engagement, and more anxiety across the board. The problem wasn’t how the bots looked. It was the fact that they were bots at all.

We’ve built systems where being yourself is a liability, and in doing so, have made interviewing an almost entirely irrelevant part of how we hire today.

Deep Fakes, Real Consequences

Look. AI can be super helpful. It can schedule things. It can fix grammar in job descriptions. It can summarize interviews after the fact. But it shouldn’t be conducting them – and we’re likely a test case away from case law agreeing with this point.

Ultimately, once you remove the human element from interviews, you remove the element of surprise, too. You remove empathy. You remove the very things that make interviews matter in the first place.

Also: any good recruiter knows that half the point of an interview is to start pre-closing the candidate as early as possible, and to anticipate any objections or pushback that might keep the offer from being accepted.

And when you start using machines to decide who gets access to opportunity, you’re not just changing the process. You’re reshaping the entire point of the process. You aren’t removing bias from hiring; you’re just replacing human bias with algorithmic bias.

But let’s be clear: the entire point of an interview is to facilitate bias. Not in the pejorative sense, but in the practical one: to develop a preference for one candidate over another based on what can best be described as the intersection of professional experience and personal chemistry.

It’s been said that the only real question an interview needs to answer is, “Can I tolerate this person enough to be around them for more than half of my waking life?”

In political terms, it’s the proverbial Beer Test: most voters ultimately choose the candidate they’d most want to have a beer with, and hiring managers select candidates based primarily on the same sentiment.

And let’s face it: AI just isn’t that much fun at company happy hours.

Recruiting Roundtable: Come for the Content, Stay for the Controversy

If this sounds familiar, or if your HR tech stack is now actively undermining the one thing recruiters were actually good at, you won’t want to miss Bot or Not: The Truth About AI in Interviewing on September 30, presented in partnership with Humanly.

There aren’t going to be any product pitches. Josh Bersin will not be cited. And unlike most vendor-sponsored events, the hosts actually know the difference between AI and automation, which will be a nice change of pace from most webinars these days.

Oh, and there’s going to be plenty of debate, since I’ll be joined by Erika Oliver, an industry analyst who has built enough products to call out my BS, and Prem Kumar, the CEO of Humanly, one of my favorite people in the industry and one of the smartest, too. PS: he just released an AI interviewing product that’s worth checking out. And I’m not just saying this because they’re sponsoring the roundtable.

So get that popcorn ready, and join us for an honest conversation about how we got here, where we’re going, and what every HR and TA pro needs to know about AI and interviewing. It’s a recruiting event that’s actually going to be entertaining, too.

Can’t make it? No worries. Sign up and we’ll send the recording and a deck straight to your inbox. Promise.
